Welcome to my homepage! I am Changyue Wang (王畅越), a second-year PhD student at the Department of Computer Science and Technology, Tsinghua University, under the supervision of Prof. Yiqun Liu.

My research interests focus on knowledge utilization in large language models (LLMs), including knowledge editing, hallucination detection, retrieval augmentation, and legal applications of AI.

Currently, I am exploring how to enable LLMs to memorize information more effectively and make better use of what they have memorized.

Beyond research, I also run a popular Xiaohongshu account, @LLM翰林院, a daily paper bot that automatically curates and summarizes the latest AI papers on arXiv. It has attracted over 17,000 followers so far, providing researchers and enthusiasts with up-to-date insights into the rapidly evolving field of LLMs.

🔥 News

- 2025.11: 🎉🎉 My first-authored Long Paper RACE has been accepted at AAAI 2026 as an Oral Presentation!

- 2025.08: 🎉🎉 My first-authored Long Paper EditCoT has been accepted at EMNLP 2025!

- 2025.05: 🎉🎉 My first-authored Long Paper DecKER has been accepted at Findings of ACL 2025!

- 2024.09: 🎉🎉 My first-authored Long Paper LeKUBE has been accepted at SIGIR-AP 2024!

- 2024.05: 🎉🎉 My co-first-authored Long Paper MIND has been accepted at Findings of ACL 2024!

- 2023.05: 🎉🎉 Our team participated in COLIEE 2023, achieving 1st place in Task 1 (Report) and 2nd place in Task 2 (Report).

🎖 Honors and Awards

- Nov. 2025, AAAI 2026 Oral.

- Jun. 2024, Outstanding Undergraduate Graduate of Beijing.

📖 Education

- Sep. 2024 - present, Ph.D. student in the Department of Computer Science and Technology, Tsinghua University, China.

- Sep. 2020 - Jun. 2024, B.Eng. in the Department of Computer Science and Technology, Tsinghua University, China.

- Sep. 2020 - Jun. 2024, B.S. (Minor) in Statistics, Tsinghua University, China.

- Sep. 2020 - Jun. 2024, Xinya College, Tsinghua University, China.

⛷️ Paper Under Submission

📝 Publications

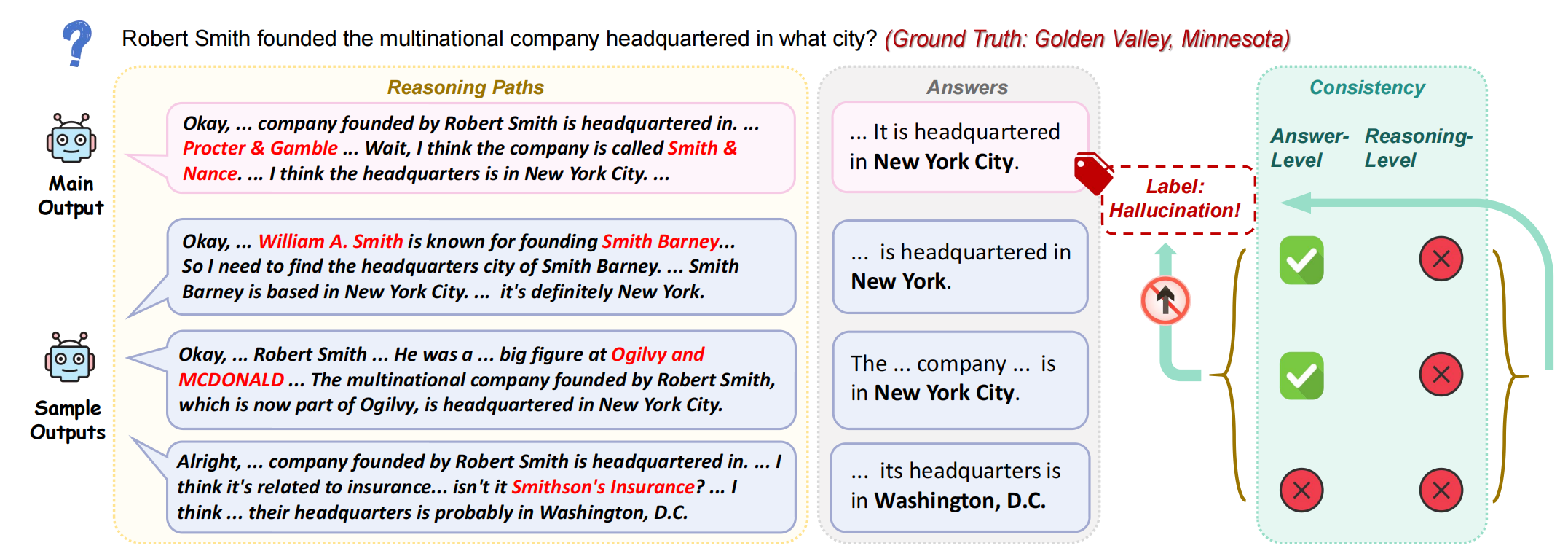

Changyue Wang, Weihang Su, Qingyao Ai, Yiqun Liu

- RACE (Reasoning and Answer Consistency Evaluation) is a framework for detecting hallucinations in Large Reasoning Models (LRMs) by jointly analyzing reasoning traces and final answers. It uses multi-signal analysis to detect inconsistencies and hallucinations, achieving robust and generalizable performance across models and datasets. RACE is the first to reveal that prior black-box hallucination detection methods are fundamentally flawed when applied to LRMs, and it pioneers the direction of black-box hallucination detection for LRMs.

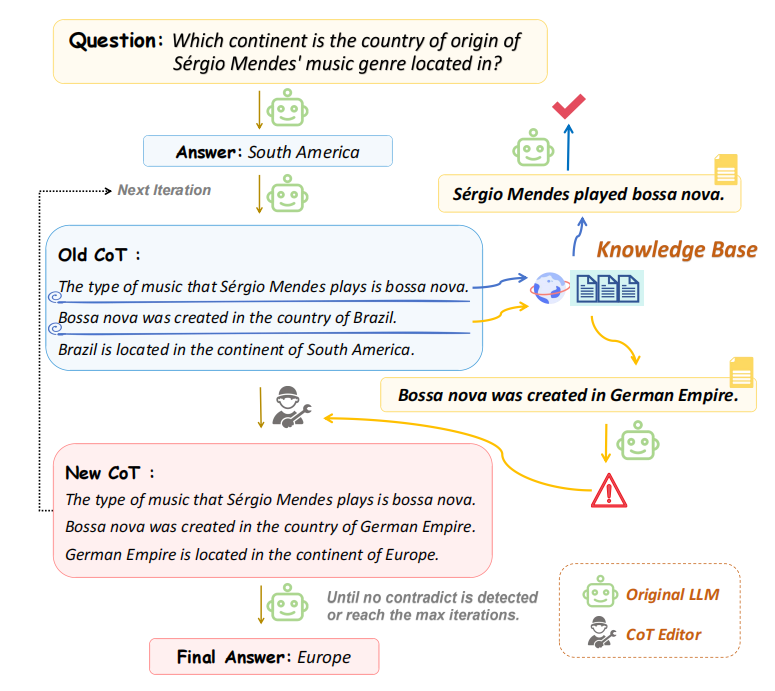

Knowledge Editing through Chain-of-Thought

Changyue Wang, Weihang Su, Qingyao Ai, Yichen Tang, Yiqun Liu

- EditCoT is a novel knowledge editing framework that updates LLMs through iterative chain-of-thought refinement, enabling efficient integration of new knowledge without retraining. It achieves state-of-the-art performance across diverse tasks and languages, offering superior generalization, stability, and effectiveness.

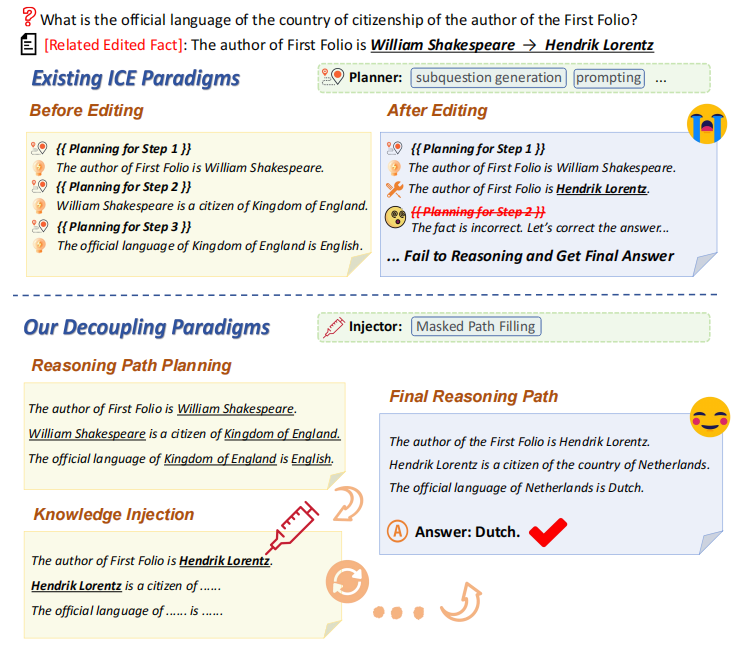

Decoupling Reasoning and Knowledge Injection for In-Context Knowledge Editing

Changyue Wang, Weihang Su, Qingyao Ai, Yujia Zhou, Yiqun Liu

- DecKER is a novel in-context editing framework that decouples reasoning from knowledge injection, mitigating conflicts between updated and original knowledge. It achieves significant improvements in multi-hop reasoning by preserving reasoning integrity while efficiently integrating new knowledge.

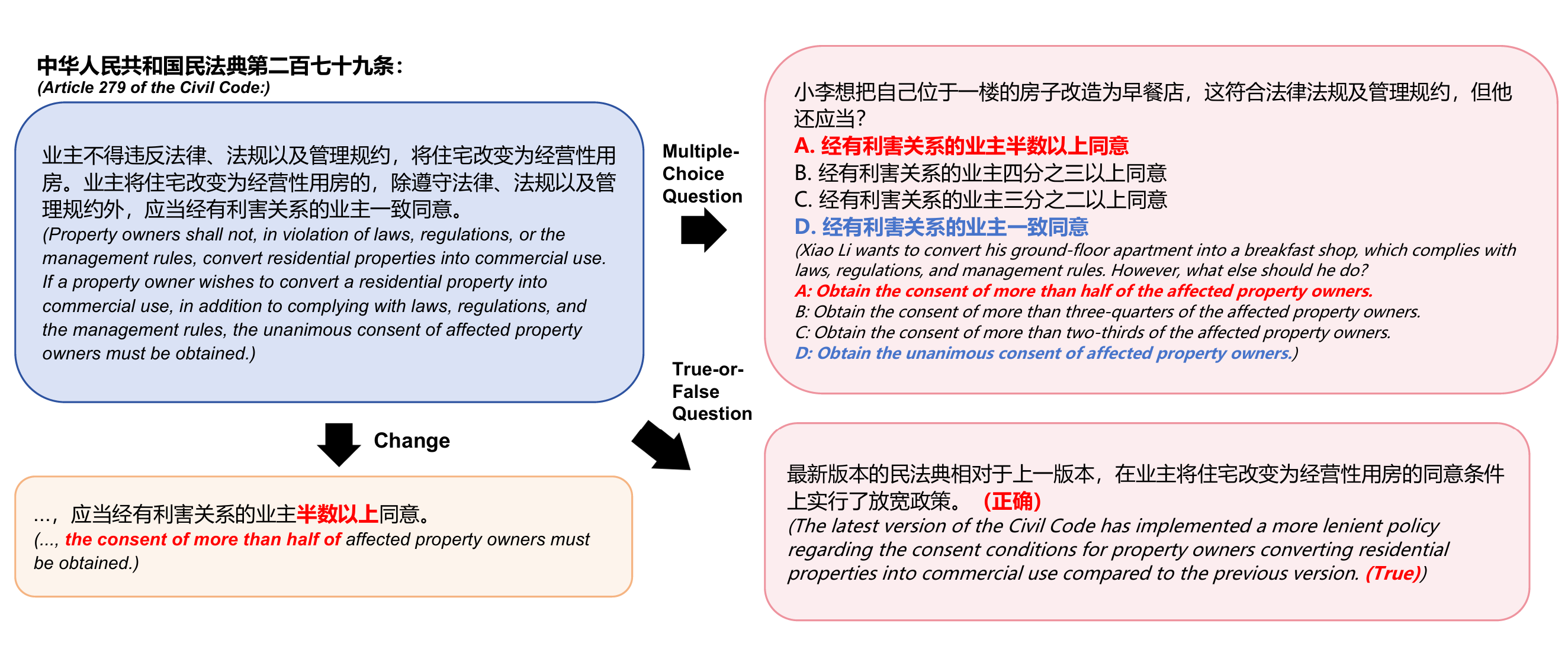

LeKUBE: A Knowledge Update BEnchmark for Legal Domain

Changyue Wang, Weihang Su, Yiran Hu, Qingyao Ai, Yueyue Wu, Cheng Luo, Yiqun Liu, Min Zhang, Shaoping Ma

- LeKUBE is a comprehensive benchmark designed to evaluate knowledge update methods for legal LLMs. It highlights the unique challenges of updating legal knowledge—such as nuanced statutory changes and complex reasoning—revealing a significant gap between current techniques and real-world legal needs.

Unsupervised real-time hallucination detection based on the internal states of large language models

Weihang Su*, Changyue Wang*, Qingyao Ai, Yiran Hu, Zhijing Wu, Yujia Zhou, Yiqun Liu

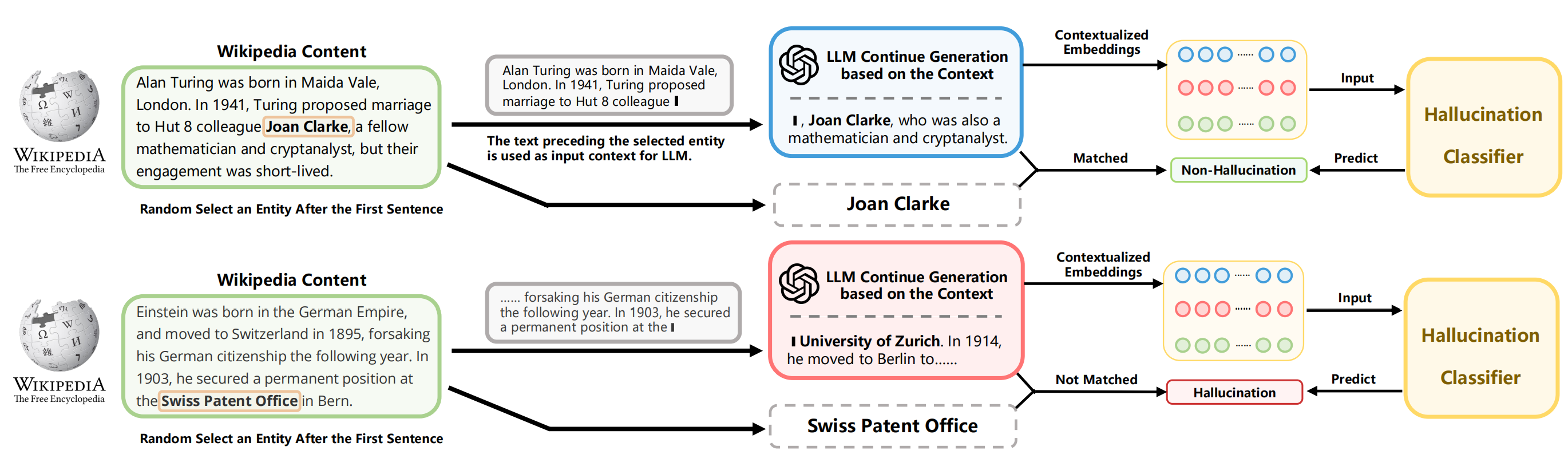

- MIND is an unsupervised framework that detects hallucinations in LLMs in real time by leveraging their internal states during inference. The accompanying benchmark, HELM, evaluates hallucination detection across diverse models and scenarios.

- Parametric Retrieval Augmented Generation, Weihang Su, Yichen Tang, Qingyao Ai, Junxi Yan, Changyue Wang, Hongning Wang, Ziyi Ye, Yujia Zhou, Yiqun Liu. SIGIR 2025

- JuDGE: Benchmarking Judgment Document Generation for Chinese Legal System, Weihang Su, Baoqing Yue, Qingyao Ai, Yiran Hu, Jiaqi Li, Changyue Wang, Kaiyuan Zhang, Yueyue Wu, Yiqun Liu. SIGIR 2025

- Pre-training for Legal Case Retrieval Based on Inter-Case Distinctions, Weihang Su, Qingyao Ai, Yueyue Wu, Anzhe Xie, Changyue Wang, Yixiao Ma, Haitao Li, Zhijing Wu, Yiqun Liu, Min Zhang. ACM TOIS

- Mitigating Entity-Level Hallucination in Large Language Models, Weihang Su, Yichen Tang, Qingyao Ai, Changyue Wang, Zhijing Wu, Yiqun Liu. SIGIR-AP 2024